| Tip | ||

|---|---|---|

| ||

Use our summer school reservation (CoreNGS-Tue) when submitting batch jobs to get higher priority on the ls6 normal queue today:

Note that the reservation name (CoreNGS-Tue) is different from the TACC allocation/project for this class, which is OTH21164. |


When you SSH into ls6, your session is assigned to one of a small set of login nodes (also called head nodes). These are separate from the cluster compute nodes that will run your jobs.

Think of a TACC node as a computer, like your laptop, but probably with more cores and memory. Now multiply that computer by a thousand or more, and you have a cluster.

...

The login nodes are a shared resource (type the users command to see everyone currently logged in). They are not meant for running interactive programs. For that, you submit a description of what you want done to a batch system, which distributes the work to one or more compute nodes.

On the other hand, the login nodes are intended for copying files to and from TACC, so they have a lot of network bandwidth while compute nodes have limited network bandwidth.

...

- Do not perform substantial computation on the login nodes.

- They are closely monitored, and you will get warnings (or worse) from the TACC admin folks!

- Code is usually developed and tested somewhere other than TACC, and only moved over when pretty solid.

- Do not perform significant network access from your batch jobs.

- Instead, stage your data from a login node onto $SCRATCH before submitting your job.

So what about developing and testing your code and workflows? Initial code development is often performed elsewhere, then migrated to TACC once it is relatively stable. But for local testing and troubleshooting, TACC offers interactive development (idev) nodes. We'll be taking advantage of idev nodes later on.

Lonestar6 and Stampede3 overview and comparison

Here is a comparison of the configurations of ls6 and stampede3. stampede3 is the newer (and larger) cluster, launched in 2024; ls6 was launched in 2022.

| | ls6 | stampede3 |
|---|---|---|
| login nodes | 3, with 128 cores each | 4, with 96 cores each |
| standard compute nodes | 560 AMD Epyc 7763 "Milan" nodes | 560 Intel Xeon "Sapphire Rapids" nodes; 1060 Intel Platinum 8160 "Skylake" nodes; 224 Intel Xeon Platinum 8380 "Ice Lake" nodes |
| GPU nodes | 88 AMD Epyc 7763 "Milan" nodes (84 in one GPU configuration, 4 in another), 128 cores per node | 20 GPU Max 1550 "Ponte Vecchio" nodes, 96 cores per node, 4x Intel GPU Max 1550 GPUs |
| batch system | SLURM | SLURM |
| maximum job run time | 48 hours, normal queue; 2 hours, development queue | 48 hours on GPU nodes, normal queue; 24 hours on other nodes, normal queue; 2 hours, development queue |

User guides for ls6 and stampede3 can be found at:

- https://docs.tacc.utexas.edu/hpc/lonestar6/

- https://docs.tacc.utexas.edu/hpc/stampede3/

Unfortunately, the TACC user guides are aimed at a different user community: the weather modelers and aerodynamic flow simulators who need very fast matrix manipulation and other High Performance Computing (HPC) features. The usage pattern for bioinformatics, generally running 3rd party tools on many different datasets, is rather a special case for HPC. TACC calls our type of processing "parameter sweep jobs" and has a special process for running them, using their launcher module.

...

When you type in the name of an arbitrary program (ls for example), how does the shell know where to find that program? The answer is your $PATH. $PATH is a predefined environment variable whose value is a list of directories. The shell looks for program names in that list, in the order the directories appear.
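To make the search order concrete, here is a small sketch that can be run anywhere, not just at TACC. The directory names and the hello script are made up for illustration; nothing in it is part of the course environment.

```bash
# Create two throwaway directories, each holding a script named "hello"
demo=$(mktemp -d)
mkdir -p "$demo/first" "$demo/second"
printf '#!/bin/sh\necho "hello from first"\n'  > "$demo/first/hello"
printf '#!/bin/sh\necho "hello from second"\n' > "$demo/second/hello"
chmod +x "$demo/first/hello" "$demo/second/hello"

# Put both directories at the front of $PATH; "first" comes before "second"
old_path=$PATH
PATH="$demo/first:$demo/second:$PATH"

# The shell reports, and runs, the copy in the earliest matching directory
found=$(command -v hello)
greeting=$(hello)
echo "$found"
echo "$greeting"

# Restore the original $PATH
PATH=$old_path
```

Swapping the order of the two directories in the PATH assignment should make the other copy win, which is the same mechanism a module uses when it puts a new directory at the front of $PATH.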

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

# first type "singularity" to show that it is not present in your environment:

singularity

# it's not on your $PATH either:

which singularity

# now add biocontainers to your environment and try again:

module load biocontainers

# and see how singularity is now on your $PATH:

which singularity

# you can see the new directory at the front of $PATH

echo $PATH

# to remove it, use "unload"

module unload biocontainers

singularity

# gone from $PATH again...

which singularity |

Note that apptainer is another name for singularity.

TACC BioContainers modules

It is quite a large systems administration task to install software at TACC and configure it for the module system. As a result, TACC was always behind in making important bioinformatics software available. To address this problem, TACC moved to providing bioinformatics software via containers, which are similar to virtual machines like VMware and VirtualBox, but are lighter weight: they require less disk space because they rely more on the host's base Linux environment. Specifically, TACC (and many other HPC clusters) uses Singularity containers, which are similar to Docker containers but are better suited to the HPC environment. In fact, one can build a Docker container and then easily convert it to Singularity for use at TACC.

TACC obtains its containers from BioContainers (https://biocontainers.pro/ and https://github.com/BioContainers/containers), a large public repository of bioinformatics tool Singularity containers. This has allowed TACC to easily provision thousands of such tools!

...

Note that loading a BioContainer does not add anything to your $PATH. Instead, it defines an alias, which is just a shortcut for executing the command using its container. You can see the alias definition using the type command. And you can ensure the program is available using the command -v utility.

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

ls -l ~/local/bin
# this shows that the real location of the launcher_creator.py script is
# /work/projects/BioITeam/common/bin/launcher_creator.py |

| Warning | ||

|---|---|---|

| ||

Remember that the order of locations in the $PATH environment variable is the order in which the locations will be searched. |

...

Job Execution

Job execution is controlled by the SLURM batch system on both stampede3 and ls6.

To run a job you prepare 2 files:

...

Here are the main components of the SLURM batch system.

| | stampede3, ls6 |

|---|---|

| batch system | SLURM |

| batch control file name | <job_name>.slurm |

| job submission command | sbatch <job_name>.slurm |

| job monitoring command | showq -u |

| job stop command | scancel -n <job name> |

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

cds
mkdir -p $SCRATCH/core_ngs/slurm/simple
cd $SCRATCH/core_ngs/slurm/simple
cp $CORENGS/tacc/simple.cmds . |

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

launcher_creator.py -j simple.cmds -n simple -t 00:01:00 -a OTH21164 -q development |

You should see output something like the following, and you should see a simple.slurm batch submission file in the current directory.

| Code Block |

|---|

Project simple.
Using job file simple.cmds.
Using development queue.
For 00:01:00 time.
Using OTH21164 allocation.
Not sending start/stop email.
Launcher successfully created. Type "sbatch simple.slurm" to queue your job. |

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

sbatch simple.slurm

showq -u

# Output looks something like this:

-------------------------------------------------------------

Welcome to the Lonestar6 Supercomputer

-------------------------------------------------------------

--> Verifying valid submit host (login2)...OK

--> Verifying valid jobname...OK

--> Verifying valid ssh keys...OK

--> Verifying access to desired queue (normal)...OK

--> Checking available allocation (OTH21164)...OK

Submitted batch job 1722779

|

The queue status will show your job as ACTIVE while it's running, or WAITING if not.

| Code Block |

|---|

SUMMARY OF JOBS FOR USER: <abattenh>

ACTIVE JOBS--------------------
JOBID     JOBNAME    USERNAME      STATE   NODES REMAINING STARTTIME
================================================================================
1722779   simple     abattenh      Running 1      0:00:39  Sat Jun  1 21:55:28

WAITING JOBS------------------------
JOBID     JOBNAME    USERNAME      STATE   NODES WCLIMIT   QUEUETIME
================================================================================

Total Jobs: 1     Active Jobs: 1     Idle Jobs: 0     Blocked Jobs: 0 |

...

Notice in my queue status, where the STATE is Running, there is only one node assigned. Why is this, since there were 8 tasks?

...

But here's a cute trick for viewing the contents of all your output files at once, using the cat command and filename wildcarding.

| Code Block | ||||

|---|---|---|---|---|

| ||||

cat cmd*.log |

The cat command can take a list of one or more files (if you're giving it files rather than standard input; more on this shortly) and outputs the concatenation of them to standard output. The asterisk ( * ) in cmd*.log is a multi-character wildcard that matches any filename starting with cmd and ending with .log. So it would also match cmd_hello_world.log.

You can also specify single-character matches inside brackets ( [ ] ) in either of the ways below, this time using the ls command so you can better see what is matching:

...

This technique is sometimes called filename globbing, and the pattern a glob. Don't ask me why; it's a Unix thing. Globbing, which translates a glob pattern into a list of files, is one of the handy things the bash shell does for you. (Read more about Pathname wildcards)
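The wildcard forms described above can be tried safely in a scratch directory. This is only a sketch: the file names are invented for the demonstration (they imitate the cmd log files from the simple job), and echo is used instead of ls so that the expanded file list is easy to capture.

```bash
# Work in a throwaway directory so no real files are touched
demo=$(mktemp -d)
cd "$demo"
touch cmd1.log cmd2.log cmd3.log cmd_hello_world.log notes.txt

# * matches any run of characters, so this picks up all four .log files
set -- cmd*.log
n_logs=$#
echo "cmd*.log matched $n_logs files: $*"

# [123] matches exactly one character taken from the listed set
one_of_set=$(echo cmd[123].log)
echo "$one_of_set"

# [1-2] matches exactly one character within the range
one_of_range=$(echo cmd[1-2].log)
echo "$one_of_range"

# Return to where we started
cd "$OLDPWD"
```

Note that the shell expands the pattern before the command runs, so echo and ls simply receive the resulting list of file names.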

Exercise: How would you list all files starting with "simple"?

...

| Code Block |

|---|

Command 1 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:47 CDT 2024
Command 2 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:47 CDT 2024
Command 3 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:51 CDT 2024
Command 4 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:48 CDT 2024
Command 5 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:45 CDT 2024
Command 6 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:49 CDT 2024
Command 7 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:51 CDT 2024
Command 8 on c304-005.ls6.tacc.utexas.edu - Sat Jun 1 21:55:48 CDT 2024 |

echo

Let's take a closer look at a typical task in the simple.cmds file.

| Code Block | ||||

|---|---|---|---|---|

| ||||

sleep 5; echo "Command 3 `date`" > cmd3.log 2>&1 |

...

So what is this funny looking `date` bit doing? Well, date is just another Linux command (try just typing it in) that just displays the current date and time. Here we don't want the shell to put the string "date" in the output, we want it to execute the date command and put the result text into the output. The backquotes ( ` ` also called backticks) around the date command tell the shell we want that command executed and its standard output substituted into the string. (Read more about Quoting in the shell.)

| Code Block | ||||

|---|---|---|---|---|

| ||||

# These are equivalent:
date
echo `date`
# But different from this:
echo date |
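As a side note, bash also accepts the $( ) form for the same substitution, which many people find easier to read and to nest. The sketch below compares the two forms; the variable names are arbitrary and chosen only for this illustration.

```bash
# Backquotes and $( ) both substitute a command's standard output
year_backquotes=`date +%Y`
year_dollar=$(date +%Y)
echo "Backquotes:   $year_backquotes"
echo "Dollar-paren: $year_dollar"

# $( ) nests without extra escaping, e.g. the name of the current directory
dir_name=$(basename "$(pwd)")
echo "Current directory name: $dir_name"

# Without substitution the shell just passes along the literal word
literal=$(echo date)
echo "$literal"
```

The course materials use backquotes, so either form should work in your commands files; just be consistent within a file.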

...

There's still more to learn from one of our simple tasks, something called output redirection:

...


...

Every command and Unix program has three "built-in" streams: standard input, standard output and standard error, each with a name, a number, and a redirection syntax.

...

Normally echo writes its string to standard output, but it could encounter an error and write an error message to standard error. We want both standard output and standard error for each task stored in a log file named for the command number.

| Code Block | ||||

|---|---|---|---|---|

| ||||

sleep 5; echo "Command 3 `date`" > cmd3.log 2>&1 |

So in the above example the first '>' says to redirect the standard output of the echo command to the cmd3.log file.

The '2>&1' part says to redirect standard error to the same place. Technically, it says to redirect standard error (built-in Linux stream 2) to the same place as standard output (built-in Linux stream 1); and since standard output is going to cmd3.log, any standard error will go there also. (Read more about Standard streams and redirection)
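The effect of these redirections is easy to check with a stand-in task. In this sketch the task function and the log file names are hypothetical; the function simply writes one line to each stream so the different redirection forms can be compared.

```bash
demo=$(mktemp -d)

# A stand-in task that writes one line to each output stream
task() {
  echo "this goes to standard output"
  echo "this goes to standard error" >&2
}

# Same form as the example above: stdout to the file, then stderr to the same place
task > "$demo/both.log" 2>&1

# Order matters: here stderr is pointed at the current stdout *before*
# stdout is redirected, so only the stdout line lands in the file
task 2>&1 > "$demo/stdout_only.log"

# The two streams can also be sent to separate files
task > "$demo/out.log" 2> "$demo/err.log"

# Count the lines that ended up in each file
both_lines=$(grep -c '' "$demo/both.log")
stdout_only_lines=$(grep -c '' "$demo/stdout_only.log")
err_lines=$(grep -c '' "$demo/err.log")
echo "both.log: $both_lines, stdout_only.log: $stdout_only_lines, err.log: $err_lines"
```

The shell processes redirections left to right, which is why 2>&1 has to come after the > in the form used for the course's tasks.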

When the TACC batch system runs a job, all outputs generated by tasks in the batch job are directed to one output file and one error file per job. Here they have names like simple.e924965 and simple.o924965. simple.o924965 contains all standard output and simple.e924965 contains all standard error generated by your tasks that was not redirected elsewhere, as well as information relating to running your job and its tasks. For large jobs with complex tasks, it is not easy to troubleshoot execution problems using these files.

So a best practice is to separate the outputs of all our tasks into individual log files, one per task, as we do here. Why is this important? Suppose we run a job with 100 commands, each one a whole pipeline (alignment, for example). 88 finish fine but 12 do not. Just try figuring out which ones had the errors, and where the errors occurred, if all the standard output is in one intermingled file and all standard error in the other intermingled file!
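One way to keep per-task logging consistent is to generate the commands file with a loop rather than typing each line. The following is a sketch only: it is not how the course's simple.cmds was produced (that is not stated here), and the task count and temporary file are arbitrary choices for the illustration.

```bash
# Write one task per line, each redirecting its output to its own log file
cmds_file=$(mktemp)
for i in 1 2 3 4; do
  # \" and \` keep the quotes and backquotes literal, so `date` is only
  # evaluated later, when the task itself runs
  echo "sleep 5; echo \"Command $i \`date\`\" > cmd$i.log 2>&1"
done > "$cmds_file"

# Inspect the result
cat "$cmds_file"
n_tasks=$(grep -c '' "$cmds_file")
third=$(sed -n '3p' "$cmds_file")
```

Each generated line has the same shape as the cmd3.log task discussed above, so a failed task can be traced straight to its own log.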

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

launcher_creator.py -j simple.cmds -n simple -t 00:01:00 -a OTH21164 -q development |

- The name of your commands file is given with the -j simple.cmds option.

- Your desired job name is given with the -n simple option.

- The <job_name> (here simple) is the job name you will see in your queue.

- By default a corresponding <job_name>.slurm batch file is created for you.

- It contains the name of the commands file that the batch system will execute.

...

TACC resources are partitioned into queues, each a named set of compute nodes with different characteristics. The main ones on ls6 are listed below. Generally you use development (-q development) when you first test your code, then normal once you're sure your commands will execute properly.

...

| Expand | |||||||

|---|---|---|---|---|---|---|---|

| |||||||

The .bashrc login script you've installed for this course specifies the class's allocation as shown below. Note that this allocation will expire after the course, so you should change that setting appropriately at some point.

|

- When you run a batch job, your project allocation gets "charged" for the time your job runs, in the currency of SUs (System Units).

- SUs are related in some way to node hours, usually 1 SU = 1 "standard" node hour.

| Tip | ||

|---|---|---|

| ||

Jobs should consist of tasks that will run for approximately the same length of time. This is because the total node hours for your job is calculated as the run time for your longest running task (the one that finishes last). For example, if you specify 100 commands and 99 finish in 2 seconds but one runs for 24 hours, you'll be charged for 100 x 24 node hours even though the total amount of work performed was only ~24 hours. |

...

| tasks per node (wayness) | cores available to each task | memory available to each task |

|---|---|---|

| 1 | 128 | ~256 GB |

| 2 | 64 | ~128 GB |

| 4 | 32 | ~64 GB |

| 8 | 16 | ~32 GB |

| 16 | 8 | ~16 GB |

| 32 | 4 | ~8 GB |

| 64 | 2 | ~4 GB |

| 128 | 1 | ~2 GB |

- In launcher_creator.py, wayness is specified by the -w argument.

- the default is 128 (one task per core)

- A special case is when you have only 1 command in your job.

- In that case, it doesn't matter what wayness you request.

- Your job will run on one compute node, and have all cores available.

Your choice of the wayness parameter will depend on the nature of the work you are performing: its computational intensity, its memory requirements and its ability to take advantage of multi-processing /multi-threading (e.g. bwa -t option or hisat2 -p option).
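The arithmetic behind the table can be sketched in a few lines of shell. The 128 cores and ~256 GB per node come from the table above; the node-count formula (tasks divided by wayness, rounded up) is an assumption about how the tasks are spread across nodes, and the task count of 10 is just an example.

```bash
cores_per_node=128    # ls6 standard compute node, per the table above
mem_per_node_gb=256   # approximate, per the table above

# Cores and memory available to each task at a few wayness values
for wayness in 1 4 16 128; do
  cores=$(( cores_per_node / wayness ))
  mem=$(( mem_per_node_gb / wayness ))
  echo "wayness $wayness: $cores cores and ~$mem GB per task"
done

# Assumed: nodes needed = number of tasks / wayness, rounded up
tasks=10
wayness=4
nodes=$(( (tasks + wayness - 1) / wayness ))
echo "$tasks tasks at wayness $wayness would need $nodes nodes"
```

Lower wayness gives each task more cores and memory but uses more nodes, so the total node hours charged go up accordingly.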

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

# If $CORENGS is not defined:
export CORENGS=/work/projects/BioITeam/projects/courses/Core_NGS_Tools
cds
mkdir -p core_ngs/slurm/wayness
cd core_ngs/slurm/wayness
cp $CORENGS/tacc/wayness.cmds . |

Exercise: How many tasks are specified in the wayness.cmds file?

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

launcher_creator.py -j wayness.cmds -n wayness -w 4 -t 00:02:00 -a OTH21164 -q development
sbatch wayness.slurm
showq -u |

...

| Code Block | ||

|---|---|---|

| ||

cat cmd*log
# or, for a listing ordered by command number (the 2nd space-separated field)
cat cmd*log | sort -k 2,2n |

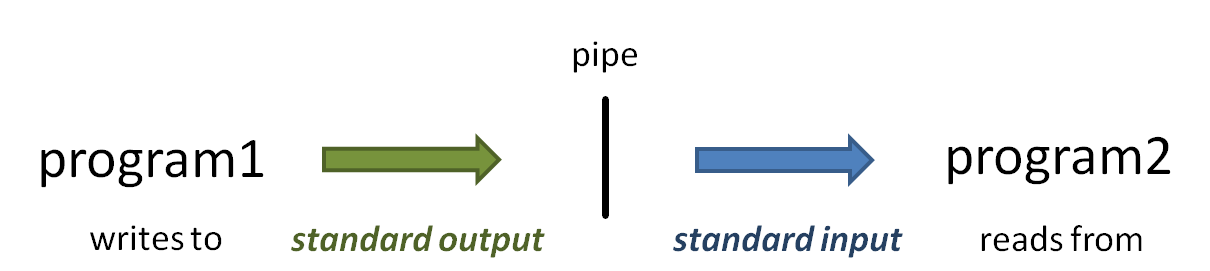

The vertical bar ( | ) above is the pipe operator, which connects one program's standard output to the next program's standard input.

(Read more about the sort command at Some Linux commands: cut, sort, uniq, and more about Piping)

You should see something like the output below.

...

Notice that there are 4 different host names. This expression:

| Code Block | ||

|---|---|---|

| ||

| ||

# the host (node) name is in the 11th field

cat cmd*log | awk '{print $11}' | sort | uniq -c |

should produce output something like this:

| Code Block | ||

|---|---|---|

| ||

4 c303-005.ls6.tacc.utexas.edu
4 c303-006.ls6.tacc.utexas.edu
4 c304-005.ls6.tacc.utexas.edu
4 c304-006.ls6.tacc.utexas.edu |

(Read more about awk in Some Linux commands: awk, and read more about Piping a histogram)
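The same awk, sort and uniq -c pipeline can be tried on a few made-up lines without running a job. Everything in the sample is invented: the host names are placeholders, and in these sample lines the host happens to be the 4th field rather than the 11th.

```bash
demo=$(mktemp -d)

# Made-up log lines; here the host name is the 4th whitespace-separated field
cat > "$demo/sample.log" <<'EOF'
Command 1 on nodeA.example.org - done
Command 2 on nodeB.example.org - done
Command 3 on nodeA.example.org - done
Command 4 on nodeA.example.org - done
EOF

# awk pulls out one field, sort brings identical values together,
# and uniq -c collapses each run of identical lines into a count
histogram=$(awk '{print $4}' "$demo/sample.log" | sort | uniq -c)
echo "$histogram"

# Pick out the count for one host (awk ignores uniq's leading padding)
nodeA_count=$(echo "$histogram" | awk '$2 == "nodeA.example.org" {print $1}')
echo "nodeA count: $nodeA_count"
```

The sort step is needed because uniq only merges adjacent duplicate lines.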

Some best practices

Redirect task output and error streams

...

Here's an example directory structure

$WORK/my_project

/01.original # contains or links to original fastq files

/02.fastq_prep # run fastq QC and trimming jobs here

/03.alignment # run alignment jobs here

/04.gene_counts # analyze gene overlap here

/51.test1 # play around with stuff here

/52.test2 # play around with other stuff here

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

cd $WORK/my_project/02.fastq_prep
ls ../01.original/my_raw_sequences.fastq.gz |

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

cd $WORK/my_project/02.fastq_prep
ln -sf ../01.original fq
ls ./fq/my_raw_sequences.fastq.gz |
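To experiment with relative symbolic links without touching a real project, the same layout can be mocked up in a temporary directory. This is a sketch under that assumption: the directory and file names mirror the example above, but the fastq file here is just an empty placeholder.

```bash
# Mock up the project layout in a throwaway location
demo=$(mktemp -d)
mkdir -p "$demo/my_project/01.original" "$demo/my_project/02.fastq_prep"
touch "$demo/my_project/01.original/my_raw_sequences.fastq.gz"

cd "$demo/my_project/02.fastq_prep"

# -s makes a symbolic link; the target path is relative to the link's own directory
ln -s ../01.original fq

# The link stores the relative path exactly as given
link_target=$(readlink fq)
echo "fq -> $link_target"

# Files in the original directory are now reachable through the link
ls ./fq/my_raw_sequences.fastq.gz
```

Because the link is relative, it should keep working if the whole my_project directory is moved or copied elsewhere.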

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

# navigate through the symbolic link in your Home directory
cd ~/scratch/core_ngs/slurm/simple
ls ../wayness
ls ../..
ls -l ~/.bashrc |

(Read more about Absolute and relative pathname syntax)

Interactive sessions (idev)

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

idev -m 60 -N 1 -A OTH21164 -p normal -r CoreNGS-Tue |

Notes:

- -p normal requests nodes on the normal queue

- this is the default for our reservation, while the development queue is the normal default

- -m 60 asks for a 60 minute session

- -A OTH21164 specifies the TACC allocation/project to use

- -N 1 asks for 1 node

- -r CoreNGS-Tue gives us priority access to TACC nodes for the class. You normally won't use this option.

...

| Code Block |

|---|

-> Checking on the status of development queue. OK
-> Defaults file    : ~/.idevrc
-> System           : ls6
-> Queue            : development (cmd line: -p )
-> Nodes            : 1 (cmd line: -N )
-> Tasks per Node   : 128 (Queue default )
-> Time (minutes)   : 60 (cmd line: -m )
-> Project          : OTH21164 (cmd line: -A )
-----------------------------------------------------------------
Welcome to the Lonestar6 Supercomputer
-----------------------------------------------------------------
--> Verifying valid submit host (login1)...OK
--> Verifying valid jobname...OK
--> Verifying valid ssh keys...OK
--> Verifying access to desired queue (development)...OK
--> Checking available allocation (OTH21164)...OK
Submitted batch job 235465
-> After your idev job begins to run, a command prompt will appear,
-> and you can begin your interactive development session.
-> We will report the job status every 4 seconds: (PD=pending, R=running).
-> job status: PD
-> job status: R
-> Job is now running on masternode= c302-005...OK
-> Sleeping for 7 seconds...OK
-> Checking to make sure your job has initialized an env for you....OK
-> Creating interactive terminal session (login) on master node c302-005.
-> ssh -Y -o "StrictHostKeyChecking no" c302-005 |

...

- the hostname command on a login node will return a login server name like login3.ls6.tacc.utexas.edu

- while in an idev session hostname returns a compute node name like c303-006.ls6.tacc.utexas.edu

- you cannot submit a batch job from inside an idev session, only from a login node

- your idev session will end when the requested time has expired

- or you can just type exit to return to a login node session

...