...

Use our summer school reservation (CoreNGS-Tue) when submitting batch jobs to get higher priority on the ls6 normal queue today.

| Code Block |

|---|

| language | bash |

|---|

| title | Request an interactive (idev) node |

|---|

|

# Request a 180 minute interactive node on the normal queue using today's reservation

idev -m 180 -N 1 -A OTH21164 -r CoreNGS-Tue

# Request a 120 minute idev node on the development queue

idev -m 120 -N 1 -A OTH21164 -p development |

| Code Block |

|---|

| language | bash |

|---|

| title | Submit a batch job |

|---|

|

# Using today's reservation

sbatch --reservation=CoreNGS-Tue <batch_file>.slurm |

Note that the reservation name (CoreNGS-Tue) is different from the TACC allocation/project for this class, which is OTH21164.

Compute cluster overview

...

| Code Block |

|---|

| language | bash |

|---|

| title | Create batch submission script for simple commands |

|---|

|

launcher_creator.py -j simple.cmds -n simple -t 00:01:00 -a TRA23004 -q development |

You should see output something like the following, and you should see a simple.slurm batch submission file in the current directory.

| Code Block |

|---|

Project simple.

Using job file simple.cmds.

Using development queue.

For 00:01:00 time.

Using TRA23004 allocation.

Not sending start/stop email.

Launcher successfully created. Type "sbatch simple.slurm" to queue your job. |

...

| Code Block |

|---|

| language | bash |

|---|

| title | Submit simple job to batch queue |

|---|

|

sbatch simple.slurm

showq -u

# Output looks something like this:

-------------------------------------------------------------

Welcome to the Lonestar6 Supercomputer

-------------------------------------------------------------

--> Verifying valid submit host (login2)...OK

--> Verifying valid jobname...OK

--> Verifying valid ssh keys...OK

--> Verifying access to desired queue (normal)...OK

--> Checking available allocation (TRA23004)...OK

Submitted batch job 1722779

|

...

| Code Block |

|---|

| language | bash |

|---|

| title | Create batch submission script for simple commands |

|---|

|

launcher_creator.py -j simple.cmds -n simple -t 00:01:00 -a TRA23004 -q development |

- The name of your commands file is given with the -j simple.cmds option.

- Your desired job name is given with the -n simple option.

- The <job_name> (here simple) is the job name you will see in your queue.

- By default a corresponding <job_name>.slurm batch file is created for you.

- It contains the name of the commands file that the batch system will execute.

...

TACC resources are partitioned into queues: named sets of compute nodes with different characteristics. The main ones on ls6 are listed below. Generally you use development (-q development) when you first test your code, then normal once you're sure your commands will execute properly.

...

| Expand |

|---|

| title | Our class ALLOCATION was set in .bashrc |

|---|

|

The .bashrc login script you've installed for this course specifies the class's allocation as shown below. Note that this allocation will expire after the course, so you should change that setting appropriately at some point. | Code Block |

|---|

| language | bash |

|---|

| title | ALLOCATION setting in .bashrc |

|---|

| # This sets the default project allocation for launcher_creator.py

export ALLOCATION=TRA23004 |

|

- When you run a batch job, your project allocation gets "charged" for the time your job runs, in the currency of SUs (Service Units).

- SUs are related in some way to node hours, usually 1 SU = 1 "standard" node hour.

| Tip |

|---|

| title | Job tasks should have similar expected runtimes |

|---|

|

Jobs should consist of tasks that will run for approximately the same length of time. This is because the total node hours for your job is calculated as the run time for your longest running task (the one that finishes last). For example, if you specify 100 commands and 99 finish in 2 seconds but one runs for 24 hours, you'll be charged for 100 x 24 node hours even though the total amount of work performed was only ~24 hours. |
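The arithmetic in this tip can be sketched in a few lines of shell. This is only an illustration, assuming one task per node (so 100 commands occupy 100 nodes, as the "100 x 24" figure implies); the function names `charged_node_hours` and `work_hours` are made up for this example and are not TACC tools.

```shell
# Node hours charged: every node is held until the longest task finishes.
# Assumes one task per node, so nodes == number of commands.
charged_node_hours() {
  local nodes="$1" longest_task_hours="$2"
  echo $(( nodes * longest_task_hours ))
}

# Hours of work actually performed, given the summed task runtimes in seconds.
work_hours() {
  LC_ALL=C awk -v s="$1" 'BEGIN { printf "%.1f\n", s / 3600 }'
}

# 100 commands on 100 nodes; the slowest one runs for 24 hours.
charged_node_hours 100 24

# 99 tasks of 2 seconds each plus one task of 24 hours.
work_hours $(( 99 * 2 + 24 * 3600 ))
```

Comparing the two numbers shows why one slow task among many fast ones is expensive: the charge scales with the slowest task, not with the work done.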

...

| tasks per node (wayness) | cores available to each task | memory available to each task |

|---|

| 1 | 128 | ~256 GB |

| 2 | 64 | ~128 GB |

| 4 | 32 | ~64 GB |

| 8 | 16 | ~32 GB |

| 16 | 8 | ~16 GB |

| 32 | 4 | ~8 GB |

| 64 | 2 | ~4 GB |

| 128 | 1 | ~2 GB |

- In launcher_creator.py, wayness is specified by the -w argument.

- the default is 128 (one task per core)

- A special case is when you have only 1 command in your job.

- In that case, it doesn't matter what wayness you request.

- Your job will run on one compute node, and have all cores available.

Your choice of the wayness parameter will depend on the nature of the work you are performing: its computational intensity, its memory requirements and its ability to take advantage of multi-processing/multi-threading (e.g. bwa -t option or hisat2 -p option).
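The wayness table is simple division, which the sketch below reproduces. It assumes the node size implied by the table (128 cores and roughly 256 GB of memory per node); the variable names and the `per_task_resources` function are hypothetical helpers for illustration only.

```shell
# Assumed node size, taken from the wayness table: 128 cores, ~256 GB memory.
CORES_PER_NODE=128
MEM_GB_PER_NODE=256

# Print the cores and approximate memory each task gets for a given wayness.
per_task_resources() {
  local wayness="$1"
  echo "wayness=$wayness cores_per_task=$(( CORES_PER_NODE / wayness )) mem_gb_per_task=$(( MEM_GB_PER_NODE / wayness ))"
}

# Try a few wayness values.
for w in 1 4 16 128; do
  per_task_resources "$w"
done
```

If a single task needs more memory or more threads than the printed share, a lower wayness is the knob to turn.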

...

| Code Block |

|---|

| language | bash |

|---|

| title | Create batch submission script for wayness example |

|---|

|

launcher_creator.py -j wayness.cmds -n wayness -w 4 -t 00:02:00 -a TRA23004 -q development

sbatch wayness.slurm

showq -u |

...

| Code Block |

|---|

|

cat cmd*log

# or, for a listing ordered by command number (the 2nd space-separated field)

cat cmd*log | sort -k 2,2n |

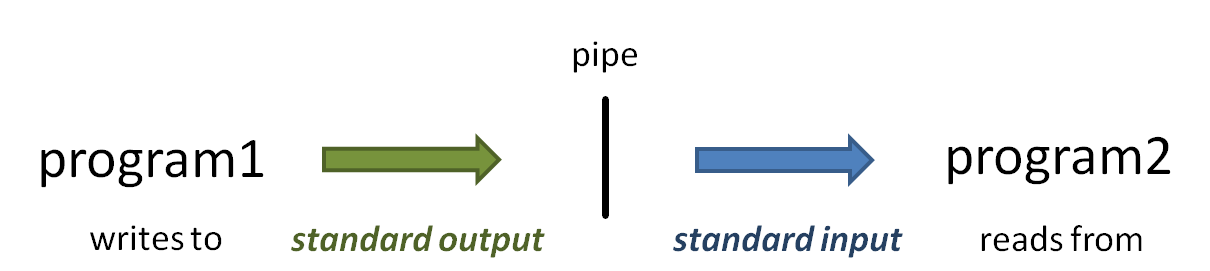

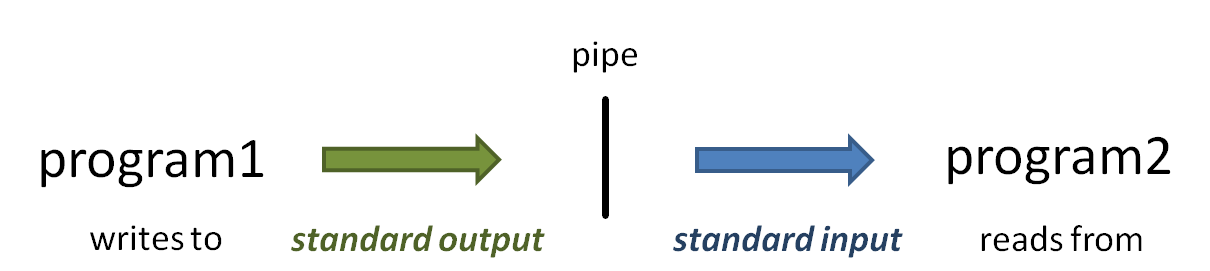

The vertical bar ( | ) above is the pipe operator, which connects one program's standard output to the next program's standard input.

(Read more about the sort command at Some Linux commands: cut, sort, uniq, and more about Piping)

You should see something like output below.

...

Notice that there are 4 different host names. This expression:

...

| Code Block |

|---|

|

# the host (node) name is in the 11th field

cat cmd*log | awk '{print $11}' | sort | uniq -c |

should produce output something like this (read more about piping commands to make a histogram)

| Code Block |

|---|

|

4 c303-005.ls6.tacc.utexas.edu

4 c303-006.ls6.tacc.utexas.edu

4 c304-005.ls6.tacc.utexas.edu

4 c304-006.ls6.tacc.utexas.edu |

(Read more about awk in Some Linux commands: awk, and read more about Piping a histogram)
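To try the two pipelines without running a job, the sketch below builds a few stand-in log files in a temporary directory. The log lines are invented for illustration: only the 2nd field (command number) and the 11th field (host name) are meaningful, and `nodeA`/`nodeB` are placeholder host names. The helper function names are also hypothetical.

```shell
# Write four made-up log files; only fields 2 and 11 matter here.
make_sample_logs() {
  local dir="$1"
  echo "Command 10 f3 f4 f5 f6 f7 f8 f9 f10 nodeB" > "$dir/cmd10.log"
  echo "Command 2 f3 f4 f5 f6 f7 f8 f9 f10 nodeA"  > "$dir/cmd2.log"
  echo "Command 1 f3 f4 f5 f6 f7 f8 f9 f10 nodeA"  > "$dir/cmd1.log"
  echo "Command 3 f3 f4 f5 f6 f7 f8 f9 f10 nodeB"  > "$dir/cmd3.log"
}

# List log lines ordered numerically by the 2nd field (command number).
logs_by_command() {
  cat "$1"/cmd*log | sort -k 2,2n
}

# Count how many commands ran on each host (the 11th field).
host_histogram() {
  cat "$1"/cmd*log | awk '{print $11}' | sort | uniq -c
}

demo_dir=$(mktemp -d)
make_sample_logs "$demo_dir"
logs_by_command "$demo_dir"
host_histogram "$demo_dir"
rm -rf "$demo_dir"
```

The `n` in `sort -k 2,2n` matters: without it, command 10 would sort before command 2 because the comparison would be character by character. The `sort` before `uniq -c` matters too, since `uniq` only collapses adjacent identical lines.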

Some best practices

Redirect task output and error streams

...

Here's an example directory structure

$WORK/my_project

/01.original # contains or links to original fastq files

/02.fastq_prep # run fastq QC and trimming jobs here

/03.alignment # run alignment jobs here

/04.gene_counts # analyze gene overlap here

/51.test1 # play around with stuff here

/52.test2 # play around with other stuff here

...

| Code Block |

|---|

| language | bash |

|---|

| title | Relative path syntax |

|---|

|

cd $WORK/my_project/02.fastq_prep

ls ../01.original/my_raw_sequences.fastq.gz |

...

| Code Block |

|---|

| language | bash |

|---|

| title | Symbolic link to relative path |

|---|

|

cd $WORK/my_project/02.fastq_prep

ln -sf ../01.original fq

ls ./fq/my_raw_sequences.fastq.gz |
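The relative symbolic link can be tried safely in a throwaway directory before using it on real data. The sketch below assumes nothing about your TACC file systems: it recreates two of the example sub-directories under a temporary directory and uses an empty placeholder file in place of a real FASTQ file.

```shell
# Build a throwaway copy of the example layout under a temporary directory.
# The .fastq.gz file is an empty placeholder, not real sequence data.
project=$(mktemp -d)/my_project
mkdir -p "$project/01.original" "$project/02.fastq_prep"
touch "$project/01.original/my_raw_sequences.fastq.gz"

# Create the relative symbolic link from inside the working directory.
cd "$project/02.fastq_prep"
ln -sf ../01.original fq

# The file is now reachable through the link.
ls ./fq/my_raw_sequences.fastq.gz

# Show the link target exactly as stored: a relative path.
readlink fq
```

Because the stored target is relative, the link keeps working if the whole project directory is moved or copied, as long as the two sub-directories stay side by side.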

...

| Code Block |

|---|

| language | bash |

|---|

| title | Relative path exercise |

|---|

|

# navigate through the symbolic link in your Home directory

cd ~/scratch/core_ngs/slurm/simple

ls ../wayness

ls ../..

ls -l ~/.bashrc |

...

| Code Block |

|---|

| language | bash |

|---|

| title | Start an idev session |

|---|

|

idev -m 60 -N 1 -A TRA23004 -p normal -r CoreNGS-Tue |

Notes:

- -p normal requests nodes on the normal queue

- this is the default for our reservation, while the development queue is the normal default

- -m 60 asks for a 60 minute session

- -A TRA23004 specifies the TACC allocation/project to use

- -N 1 asks for 1 node

- -r CoreNGS-Tue gives us priority access to TACC nodes for the class. You normally won't use this option.

...

| Code Block |

|---|

-> Checking on the status of development queue. OK

-> Defaults file : ~/.idevrc

-> System : ls6

-> Queue : development (cmd line: -p )

-> Nodes : 1 (cmd line: -N )

-> Tasks per Node : 128 (Queue default )

-> Time (minutes) : 60 (cmd line: -m )

-> Project : TRA23004 (cmd line: -A )

-----------------------------------------------------------------

Welcome to the Lonestar6 Supercomputer

-----------------------------------------------------------------

--> Verifying valid submit host (login1)...OK

--> Verifying valid jobname...OK

--> Verifying valid ssh keys...OK

--> Verifying access to desired queue (development)...OK

--> Checking available allocation (TRA23004)...OK

Submitted batch job 235465

-> After your idev job begins to run, a command prompt will appear,

-> and you can begin your interactive development session.

-> We will report the job status every 4 seconds: (PD=pending, R=running).

-> job status: PD

-> job status: R

-> Job is now running on masternode= c302-005...OK

-> Sleeping for 7 seconds...OK

-> Checking to make sure your job has initialized an env for you....OK

-> Creating interactive terminal session (login) on master node c302-005.

-> ssh -Y -o "StrictHostKeyChecking no" c302-005 |

...